January 15, 2026

In this article, Alexis Yang (https://www.linkedin.com/in/alexis-yang-s/) gives us a look into her time as an intern at Amass after finishing her Master’s degree at MIT in Computation and Cognition. Alexis shares her expectations, what it’s like to intern in Copenhagen, and how she developed Amass’s trial agent, from inception to launch.

This summer, I had the opportunity to work at Amass Technologies as an AI Engineering intern. Moving from Boston to Copenhagen for the role was both an exciting adventure and a big transition. Additionally, coming from a research-focused background, I was eager to dive into building AI tools that support discovery and decision-making in the biotech and pharma scene in Copenhagen!

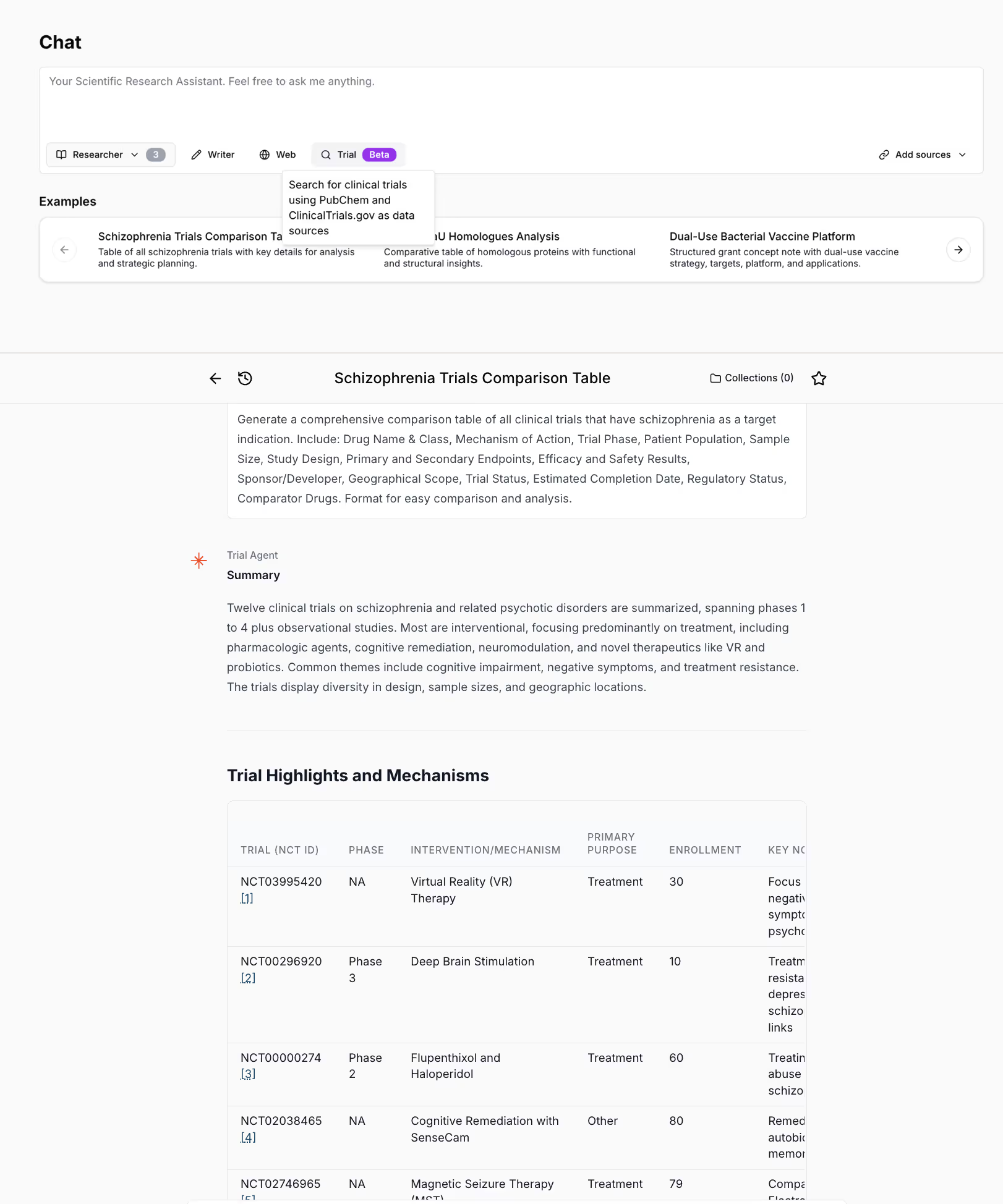

My project was to onboard ClinicalTrials.gov as a data source in Amass and build an AI agent in chat to retrieve and extract trial facts (design choices, endpoints, treatment arms, eligibility) on demand. With many users of the Amass platform entering trial phases, a clinical trials integration was highly requested. Moreover, users found the ClinicalTrials.gov interface clunky: key details were tedious to find, and comparing information meant scrolling through long trial pages.

As an intern, I didn’t expect to own a user-facing feature, but as I got started, I realized this would be a great learning experience: it was an opportunity to get hands-on experience with agentic AI, gain exposure to how biotech teams evaluate trials, and ship something impactful. Over the next few months I partnered with Alex and Ruben from the engineering team to bring this feature to life.

I started with quick field research. Sharing an office with many customers made user conversations easy. After six user interviews, the core requirements became clear.

This feature needed to have a few key functionalities:

A key challenge for end users of ClinicalTrials.gov is its complex interface and its reliance on keyword search. We found that the choice of keywords can greatly affect which trials are found, making it easy to miss relevant studies. To overcome this, we needed a system that could not only expand queries with alternative search terms to capture all potentially relevant trials, but also evaluate the results and decide which ones were truly relevant. This was a perfect opportunity to leverage AI agents.

AI agents are systems designed to interact with their environment in order to achieve defined objectives. Unlike traditional systems that follow fixed, linear workflows, AI agents are dynamic and adaptable. They can assess a task, choose the right tools, and loop back to try different approaches until they reach a good result.

This flexibility is especially valuable for our use case, since the first attempt often fails to surface the right trials. Traditional systems are rigid: once they commit to a path, they do not easily pivot or explore alternatives unless they are explicitly programmed to do so. However, exhaustively exploring every possible path can be costly. An agentic approach offers a middle ground: it can change course when needed without brute-forcing every option.
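To make the "middle ground" idea concrete, here is a minimal sketch of a bounded retry loop. It is an illustration under stated assumptions, not the Amass implementation: the names `search_with_retries`, `search`, `is_good_enough`, and `reformulate` are hypothetical, and plain Python callables stand in for the decisions an LLM-driven agent would make.

```python
from typing import Callable


def search_with_retries(
    query: str,
    search: Callable[[str], list[str]],
    is_good_enough: Callable[[list[str]], bool],
    reformulate: Callable[[str, int], str],
    max_attempts: int = 3,
) -> tuple[list[str], int]:
    """Try a query, check the results, and reformulate up to max_attempts times.

    Returns the last results seen and the number of attempts used.
    All callables are hypothetical stand-ins for agent tools.
    """
    results: list[str] = []
    for attempt in range(1, max_attempts + 1):
        results = search(query)
        if is_good_enough(results):
            # Stop early: no need to explore further alternatives.
            return results, attempt
        # Change course: ask for a different phrasing of the query.
        query = reformulate(query, attempt)
    # Budget exhausted: return whatever the final attempt produced.
    return results, max_attempts


# Toy usage with an in-memory "index" (made-up data for illustration).
toy_index = {"aspirin": [], "acetylsalicylic acid": ["trial-1"]}
found, attempts = search_with_retries(
    "aspirin",
    search=lambda q: toy_index.get(q, []),
    is_good_enough=lambda r: len(r) > 0,
    reformulate=lambda q, n: "acetylsalicylic acid",
)
```

The attempt budget is what keeps the loop from exploring every option: the agent pivots when results look poor, but only a fixed number of times.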

I developed an AI agent equipped with tools to rewrite queries with expanded terms, scan trial results, and identify the most relevant studies. This addresses a major gap in typical trial analysis workflows. For example, I implemented tools that could expand user queries by finding molecule synonyms through PubChem, and capture scientific context using our researcher tool. This helped the agent surface relevant trials that a simple keyword search would miss. Importantly, as this was my first time building an agentic system, I came away with a few key learnings. For instance, I initially tended to pile on new tools to see if they improved the system, but I quickly realized that refining and augmenting a small set of well-designed tools was far more effective than giving the agent too many.
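As a rough illustration of query expansion with molecule synonyms, the sketch below builds a single search expression from a term and its aliases. It makes several assumptions: the synonym table is hard-coded here rather than fetched from PubChem, the function name `expand_query` is hypothetical, and the search backend is assumed to accept quoted terms joined by `OR`.

```python
def expand_query(term: str, synonyms: dict[str, list[str]], max_terms: int = 5) -> str:
    """Build an OR-joined search expression from a term and its known aliases.

    `synonyms` maps a lowercase term to a list of aliases. In a real system
    these could come from a synonym service; here they are supplied by hand.
    """
    candidates = [term] + synonyms.get(term.lower(), [])
    seen: set[str] = set()
    unique: list[str] = []
    for candidate in candidates:
        key = candidate.lower()
        if key not in seen:  # drop case-insensitive duplicates, keep order
            seen.add(key)
            unique.append(candidate)
    # Cap the number of terms so the expanded query stays manageable.
    return " OR ".join(f'"{c}"' for c in unique[:max_terms])


# Illustrative alias table (hand-written for this example).
aliases = {"aspirin": ["acetylsalicylic acid", "Aspirin", "ASA"]}
expanded = expand_query("aspirin", aliases)
# expanded == '"aspirin" OR "acetylsalicylic acid" OR "ASA"'
```

Capping the list with `max_terms` reflects the same lesson as with tools: a few well-chosen aliases tend to be more useful than every alias available.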

Developing in a startup environment was fast and iterative. I demoed progress internally in weekly meetings, and after a few weeks of development, we shipped a pilot to select users, collected feedback, and iterated quickly.

When building our clinical trial search agent, success hinged as much on evaluation as on retrieval. Thus, setting up an evaluation framework was the first priority, even before building the trial agent. A good evaluation framework can help shape system design while identifying weaknesses.

It was important to know what a “good” answer meant in the context of trials. We narrowed it down to a few key characteristics:

Given users’ ability to triage results quickly, we emphasized recall as the primary evaluation metric, reasoning that missing a relevant trial is more costly than retrieving an extra one. To test this, I built a gold set of real user queries and expanded it with LLM-generated variations covering different diseases and interventions. For each query, we identified the trials that should be retrieved, creating a representative benchmark for systematic evaluation.
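For readers who want to see what recall against a gold set looks like in practice, here is a minimal sketch. The function names, query keys, and trial identifiers are placeholders invented for this example, not real queries or registry IDs, and treating a query with no relevant trials as perfect recall is a convention chosen here.

```python
def recall(retrieved: set[str], relevant: set[str]) -> float:
    """Fraction of the relevant trials that were actually retrieved."""
    if not relevant:
        # Convention for this sketch: nothing to find counts as full recall.
        return 1.0
    return len(retrieved & relevant) / len(relevant)


def mean_recall(gold: dict[str, set[str]], runs: dict[str, set[str]]) -> float:
    """Average per-query recall over every query in the gold set."""
    scores = [recall(runs.get(query, set()), relevant) for query, relevant in gold.items()]
    return sum(scores) / len(scores) if scores else 0.0


# Placeholder gold set: query -> trials that should be retrieved.
gold_set = {"q1": {"trial-a", "trial-b"}, "q2": {"trial-c"}}
# Placeholder system output: query -> trials the agent retrieved.
agent_runs = {"q1": {"trial-a", "trial-x"}, "q2": {"trial-c"}}

score = mean_recall(gold_set, agent_runs)  # (0.5 + 1.0) / 2 = 0.75
```

Note that the extra `trial-x` retrieved for `q1` does not lower the score, which matches the reasoning that a missed trial costs more than an extra one.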

Evaluating at multiple levels (retrieval/extraction/final answer) helped pinpoint which tools needed adjustment. I implemented an evaluation workflow tied to AI agent traces, which tracks recall and answer quality across the agent’s workflow. The insights from this process directly shaped development. For instance, poor recall directly led to an expansion of search terms using PubChem molecule aliases, while weak answer quality revealed the need for restructuring information so the agent could reason more effectively.

In the second half of my internship, these evaluations continued to guide our iterations, resulting in the beta launch and a later general release of the trial agent on the Amass platform. It was surreal to see it live as a tool within the Amass platform!

Top: The Amass chat interface, where the user can pose a query and select sources. Here, the Trial Agent is chosen, which queries ClinicalTrials.gov and expands the search with molecule synonyms from PubChem to capture all relevant results. Bottom: The user requests a comprehensive table of schizophrenia trials for comparative analysis. The agent returns a summary and a table with trial phases, intervention mechanisms, enrollment numbers, and key notes, among other details. This organization allows the user to efficiently review and compare 12 schizophrenia trials in one place, rather than scrolling through lengthy individual trial pages.

While it feels like the internship flew by, when I think back to my first week at Amass, I realize how much I’ve grown. Working at a startup improved my ability to operate under pressure while wearing many hats, from interacting with customers to evaluating the system and demoing the trial agent feature. It also deepened my understanding of the drug-development lifecycle and gave me the opportunity to design tools aligned with real clinical trial workflows, including trial landscape analysis, trial design comparisons, and endpoint and eligibility tracking.

Most importantly, taking on responsibility for a feature was both challenging and rewarding. Overall, it was an incredible opportunity to learn and grow in just four months.

I’d like to thank the team at Amass - Ruben, Alex, Henrik, Rene, Mads, and Niels - for a wonderful summer. Now, as I head back to Boston, I’m excited to bring all this new knowledge and experience into my research and future endeavors.